Large Language Models (LLM) are artificial intelligence tools that can read, summarize and translate text, and predict upcoming words in a sentence, forming phrases and sentences that are very similar to those uttered by real people. These models are trained on large amounts of data, which means that the model can learn new abilities that are not always planned.

For example, adding more “training data” to a language model may unexpectedly result in it gaining the ability to translate between different languages, even though it was not trained to do so.

This kind of new abilities are called emergent abilities, i.e. abilities that pop up and are not necessarily planned for. As one research paper states: “Although there are dozens of examples of emergent abilities, there are currently few compelling explanations for why such abilities emerge in the way they do.”

Not even experts can explain why different abilities are learned, but it is well known that increasing the amount of training data allows a machine to learn more abilities.

On the flip side, the drawback of increasing the training data is that more computational power is required to produce an output, which makes the AI slower at the moment it’s generating text output – the moment called “inference time”.

As a result, increasing an AI’s intelligence with more data comes at the expense of making it slower at inference time.

Confident Adaptive Language Modeling

Researchers at Google have come up with an interesting solution to speed up language models while maintaining high performance levels.

To provide an analogy, the solution is comparable to the difference between responding to a simple question and solving a more difficult one. Answering an easy question, like “What color is the sky?” doesn’t need much thought. A hard question, however, forces you to pause and consider it further before giving an answer.

Large language models, in terms of computation, do not distinguish between the challenging and straightforward aspects of a text generation task. At the point of inference, they generate text using their full computing power for both the easy and difficult parts.

Google’s solution is dubbed Confident Adaptive Language Modeling (CALM).

What this new framework does better is devoting fewer resources to the trivial parts of a text generation task and devoting full power to the more difficult parts.

The research paper on CALM defines the problem and solution as follows:

Recent advances in Transformer-based large language models (LLMs) have led to significant performance improvements across many tasks.

These gains come with a drastic increase in the models’ size, potentially leading to slow and costly use at inference time.

In practice, however, the series of generations made by LLMs is composed of varying levels of difficulty.

While certain predictions truly benefit from the models’ full capacity, other continuations are more trivial and can be solved with reduced compute.

CALM works by dynamically allocating resources according to the complexity of each individual part of the task, employing an algorithm to determine whether something requires full or partial resources.

The researchers explain that they tested the new system for various natural language processing tasks (“text summarization, machine translation, and question answering”) and found that they were able to accelerate the inference by as much as 300 percent.

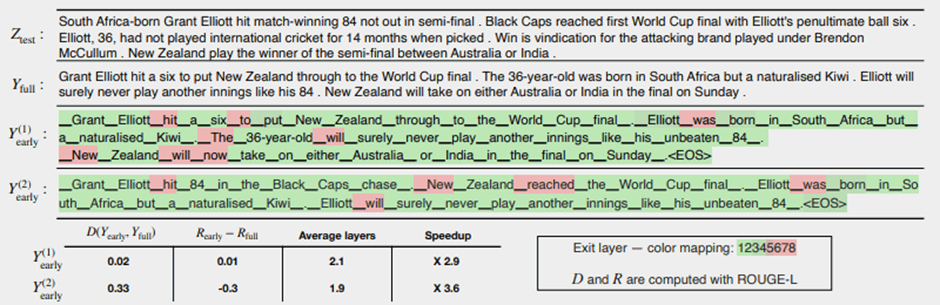

Figure 1 below shows the effectiveness of the CALM system. Several areas highlighted in red denote those parts of the task where the machine had to operate at full capacity. Green indicates where the machine utilized less than half its capacity.

The researchers note that implementing CALM requires only minimal modifications in order to adapt a large language model to become faster.

This research is significant because it paves the way for the development of more advanced AI models that can train on far larger data sets without experiencing slower speeds and compromising performance.

Still, it is possible that this method can also be used for large language models that are trained on a smaller amount of data. InstructGPT models, for instance, whose sibling model is ChatGPT, are trained on roughly 1.3 billion parameters, yet they still outperform models trained on substantially more parameters.

In conclusion, the researchers pointed out:

Overall, our complete adaptive compute framework for LMs requires minimal modifications to the underlying model and enables efficiency gains while satisfying rigorous quality guarantees for the output.

The information about this research paper was published on Google AI blog on December 16, 2022. The research paper itself is dated October 25, 2022.